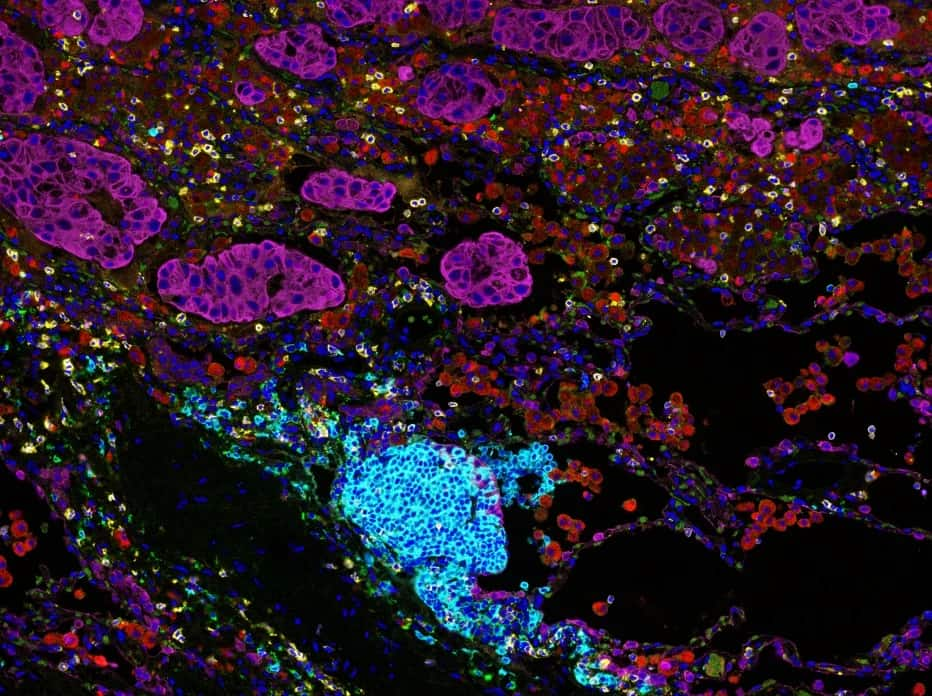

Spatial Omics Cell Segmentation and Signal Extraction

Project Objective:

To build a high-accuracy cell segmentation model that uses a ResNet50 backbone with a feature pyramid network (FPN) to identify cancerous cells, supporting early detection and diagnosis.

Key Contributions:

In this project, I built a cell segmentation model that pairs a ResNet50 backbone with a feature pyramid network, which lets the model use features at multiple scales. The model supported accurate identification of cancerous cells for diagnostic and research work. I also used multi-GPU setups to speed up training and handle large datasets efficiently.

Skills Acquired:

Throughout this project, I advanced my skills in implementing deep learning models for medical imaging and cell segmentation, became proficient in using ResNet50 and feature pyramid networks for complex image analysis, and gained experience working with large datasets while optimizing computational resources through multi-GPU configurations. I also deepened my knowledge of cancer research and of how machine learning is applied in biomedical diagnostics.

Neural Network-Based Flow Cytometry Gating Automation

Project Objective:

To develop a neural network model that automates gating in high-dimensional flow cytometry data, addressing class imbalance to improve the accuracy and reliability of the analysis.

Key Contributions:

In this project, I designed and implemented a multi-label, multi-class neural network classification model tailored to high-dimensional flow cytometry data. Using robust class-balancing strategies, the model achieved an F1 score of 0.97. I used multi-GPU setups to speed up training and handle large-scale data.
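
The source does not say which class-balancing strategies were used. One common strategy, shown here only as a minimal sketch, is to weight each class inversely to its frequency so that rare populations contribute more to the loss. The function name `balanced_class_weights` and the gate labels are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes get weights above 1 and common classes weights below 1,
    so every class contributes roughly equally to a weighted loss.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical gate labels for 100 events: one population dominates.
labels = ["gate_a"] * 90 + ["gate_b"] * 8 + ["gate_c"] * 2
weights = balanced_class_weights(labels)
for cls, w in sorted(weights.items()):
    print(f"{cls}: {w:.3f}")
# gate_a: 0.370, gate_b: 4.167, gate_c: 16.667
```

This is the same formula scikit-learn uses for `class_weight="balanced"`. In PyTorch, such weights can be passed as the `weight` argument of `CrossEntropyLoss`; for multi-label targets, a per-label negative-to-positive ratio is often passed as `pos_weight` to `BCEWithLogitsLoss`.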

Skills Acquired:

Through this project, I gained advanced proficiency in developing and fine-tuning neural network architectures, hands-on experience with multi-GPU environments and large-scale data processing, and a deeper understanding of flow cytometry data and its applications in biomedical research. I also developed expertise in class-balancing techniques and performance evaluation metrics.

EHR Medical Notes Parsing Using Natural Language Processing

Project Objective:

The goal of this project was to build a system that parses medical notes in Electronic Health Records (EHRs) and accurately extracts patients' key medication information. Standardizing this extraction was intended to reduce the risk of medication errors, improving patient safety and the efficiency of healthcare delivery.

Key Contributions:

I led the creation of a comprehensive corpus of annotated medical texts, working closely with EHR data specialists and physicians, and enriched it with features from the Unified Medical Language System (UMLS) and SNOMED Clinical Terms (SNOMED CT) to make it more robust and relevant. I conducted an extensive literature review of existing solutions in this domain to ground our approach. My key contribution was a custom Named Entity Recognition (NER) model tailored to extract critical patient data from medical texts. Benchmarked against current state-of-the-art solutions, the model achieved an F1 score of 0.93, surpassing those benchmarks.
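
The source reports an F1 score of 0.93 but does not say whether it was computed with strict or lenient span matching, or micro- or macro-averaged. As a minimal sketch, assuming strict matching pooled over all entities (micro-averaging), entity-level F1 can be computed as below; the function name, entity labels, and character offsets are hypothetical.

```python
def entity_f1(gold, pred):
    """Strict entity-level precision, recall and F1.

    gold and pred are collections of (doc_id, start, end, label) tuples;
    a prediction counts only if span boundaries and label match exactly.
    """
    gold, pred = set(gold), set(pred)
    true_pos = len(gold & pred)
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical character offsets for one note; the FREQUENCY span is truncated.
gold = {(0, 10, 19, "DRUG"), (0, 20, 26, "DOSE"), (0, 27, 38, "FREQUENCY")}
pred = {(0, 10, 19, "DRUG"), (0, 20, 26, "DOSE"), (0, 27, 32, "FREQUENCY")}
p, r, f1 = entity_f1(gold, pred)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
# precision=0.667 recall=0.667 f1=0.667
```

Under strict matching, a prediction with the right label but wrong boundaries counts as both a false positive and a false negative, which is why lenient (overlap-based) variants usually report higher scores.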

Skills Acquired:

Through this project, I significantly deepened my understanding of Electronic Health Records, including the Fast Healthcare Interoperability Resources (FHIR) standard and the Health Insurance Portability and Accountability Act (HIPAA) regulations. This knowledge sharpened my technical skills and equipped me to navigate the complexities of healthcare data while ensuring compliance and security in my work. The project shaped my approach to healthcare data analysis and has been a cornerstone of my professional development as a data scientist.

Photographic Wound Assessment Tool (PWAT)

Project Objective:

This project applied machine learning and deep learning techniques to evaluate the healing of chronic wounds from images captured with smartphones. The aim was a method for tracking wound healing progress remotely, efficiently, and accurately.

Key Contributions:

I led the implementation and deployment of a machine learning system that combines multiple deep learning modules to analyze wound images in detail with sub-second latency. I assessed model accuracy and predictive uncertainty across the diverse images in our wound dataset, and developed a regression model that predicts how certain the system will be about the analysis of a new wound image, improving the reliability of its predictions. I also ran correlation studies linking image attributes to classification uncertainty and built an uncertainty feedback module that surfaces this information in the user interface to support better decision-making. Finally, I designed and evaluated live testing scenarios, using kappa statistics to measure agreement between real-time predictions and established ground truths and so validate the system's accuracy in practical use.
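
The source mentions kappa metrics without naming the variant. As a minimal sketch, assuming unweighted Cohen's kappa, agreement between live predictions and ground truth can be corrected for chance as below; the function name and the 0 to 4 scores are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: agreement corrected for chance.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected from each rater's label frequencies.
    """
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("need two equal-length, non-empty label sequences")
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1.0:
        return float("nan")  # undefined: both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ordinal scores: ground truth vs. live model predictions.
ground_truth = [0, 1, 2, 2, 3, 4, 1, 0]
predicted = [0, 1, 2, 3, 3, 4, 1, 1]
print(round(cohens_kappa(ground_truth, predicted), 3))
# 0.686
```

Because wound scores are ordinal, a weighted kappa that penalizes a 0-versus-4 disagreement more than a 2-versus-3 one is often preferred; scikit-learn's `cohen_kappa_score` supports this via `weights="linear"` or `weights="quadratic"`.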

Skills Acquired:

I gained substantial experience implementing computer vision projects from scratch, with a strong focus on deep learning, which deepened my technical expertise and broadened my understanding of practical applications. I also developed specialized expertise in integrating user feedback mechanisms into machine learning systems. This work reinforced the importance of adaptability and a user-centric approach: the systems I build should be technically sound and also easy to use. Finally, I gained experience in scaling and deployment, scaling applications to a large user base while maintaining robust performance and reliability in real-world settings.

Digital Content Recommendation System

Project Objective:

The project aimed to build a recommender system for Sadhguru's diverse content that provides personalized recommendations in response to user queries, increasing engagement with his extensive repository of videos, podcasts, and blogs.

Key Contributions:

I developed a low-latency content recommendation engine for the Isha Foundation using MiniLM. Using a retrieve-and-re-rank strategy powered by language models, the system answered natural language queries with specific content recommendations in near real time, achieving a response time of 2 seconds and a throughput of 100 requests per second. It supported 14 popular languages and recommended content across media types, serving Sadhguru's global following.
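
The retrieve-and-re-rank pattern can be illustrated with a toy, self-contained sketch. Here bag-of-words cosine similarity and shared-bigram counting are stand-ins (assumptions) for the language-model scorers; in a MiniLM-based system, stage 1 would typically compare embeddings from a bi-encoder and stage 2 would score query and item pairs with a cross-encoder, but the source does not specify these details. All function names, item ids, and texts are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(text):
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, corpus, k):
    """Stage 1: score every item cheaply and keep the top-k ids."""
    q = bag_of_words(query)
    ranked = sorted(corpus, key=lambda item_id: cosine(q, bag_of_words(corpus[item_id])), reverse=True)
    return ranked[:k]


def shared_bigrams(query, text):
    """Stage-2 stand-in for a cross-encoder: count word bigrams shared with the query."""
    def bigrams(s):
        words = s.lower().split()
        return set(zip(words, words[1:]))
    return len(bigrams(query) & bigrams(text))


def rerank(query, candidate_ids, corpus, scorer):
    """Stage 2: apply the costlier scorer only to the shortlisted candidates."""
    return sorted(candidate_ids, key=lambda item_id: scorer(query, corpus[item_id]), reverse=True)


# Hypothetical catalogue mixing content types.
corpus = {
    "video:1": "guided evening meditation for better sleep",
    "podcast:1": "podcast on sleep and health",
    "blog:1": "better sleep for meditation beginners",
    "video:2": "yoga practice for morning energy",
}
query = "meditation for better sleep"
shortlist = retrieve(query, corpus, k=3)
print(shortlist)  # ['blog:1', 'video:1', 'podcast:1']
print(rerank(query, shortlist, corpus, shared_bigrams))  # ['video:1', 'blog:1', 'podcast:1']
```

The design point is cost: the cheap scorer runs over the whole catalogue, while the more precise (and more expensive) scorer runs only on the short list, which here moves the item whose wording matches the query phrase to the top.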

Skills Acquired:

This project strengthened my expertise in building recommender systems, natural language processing with MiniLM, and optimizing backend systems for low latency and high throughput. I also gained insight into user-friendly UI design for a better user experience.

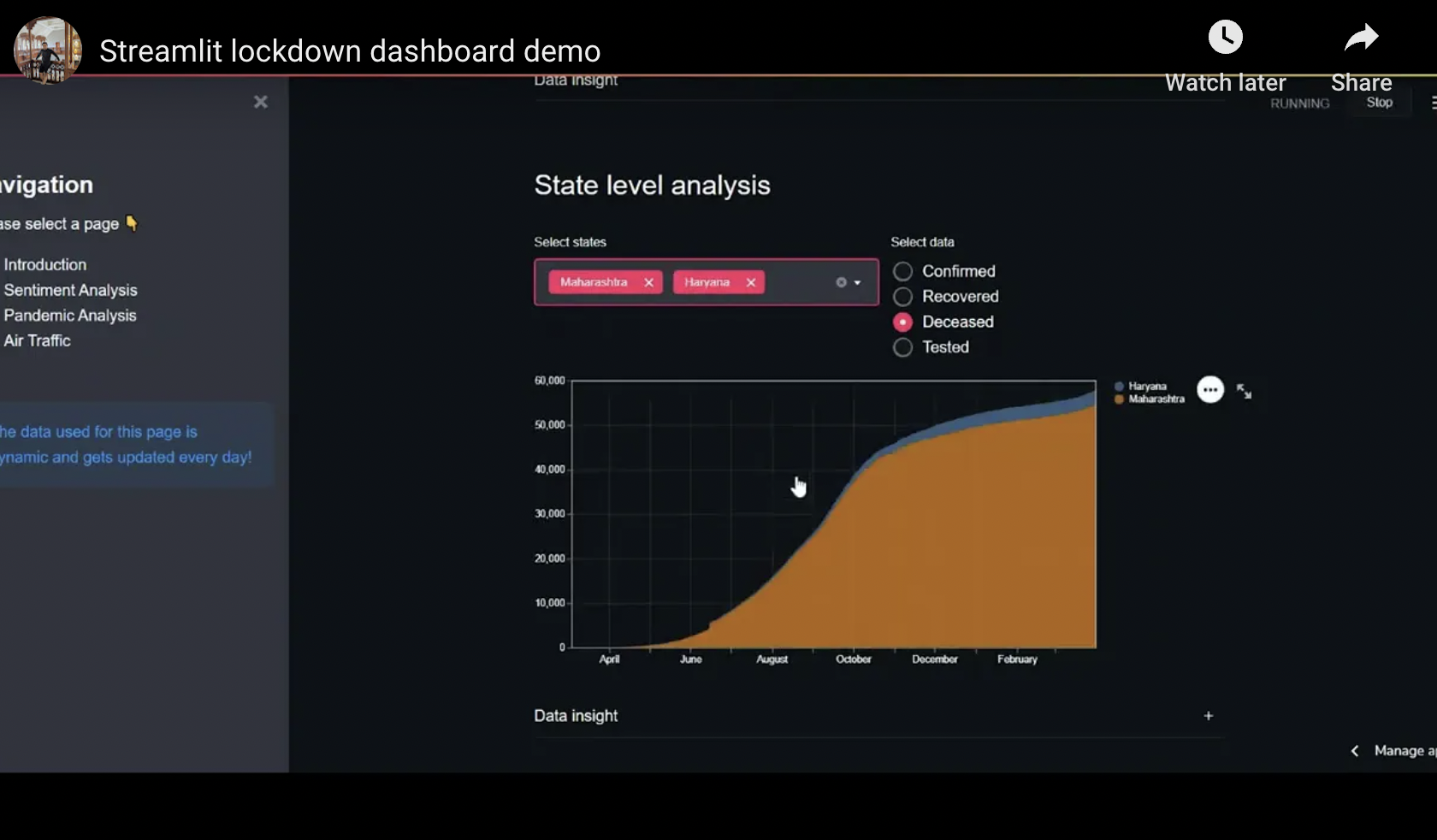

Real-Time COVID-19 Impact Dashboard: Narrating India's Lockdown Journey

Project Objective:

In March 2021, one year after India's COVID-19 lockdown began, I started a personal project to apply my data storytelling skills. The aim was to create a dynamic dashboard that showed the lockdown's impact on various aspects of life in India and also served as a live tool for analyzing ongoing COVID-19 cases and recoveries.

Key Contributions:

I built the dashboard with Streamlit and hosted it online to provide up-to-date insights. It featured dynamic time-series plots of deaths, cases, and recoveries, with filters for state, time period, and lockdown phase to allow granular analysis. The COVID-19 data was refreshed every time the dashboard ran, so the figures shown were always current. To gauge public sentiment, I built a Twitter scraper that collected a year's worth of tweets about the lockdown in India, and I assessed citizens' sentiment toward the lockdown with a pre-trained sentiment analysis model, such as a BERT (Bidirectional Encoder Representations from Transformers) based classifier or VADER (Valence Aware Dictionary and sEntiment Reasoner). I also used publicly available data from the Airports Authority of India to visualize the decline in flights and cargo during the lockdown. Together, these elements told a data-driven story of how pervasively the lockdown affected India, alongside a live view of current COVID-19 data.

Skills Acquired:

This project significantly honed my skills in data storytelling, real-time data visualization, and sentiment analysis. It deepened my experience with Streamlit and with presenting data in an interactive, user-friendly way. Building a Twitter scraper and applying sentiment analysis broadened my understanding of natural language processing tools, and analyzing publicly available flight and cargo data gave me practice in deriving insights from varied data sources and presenting them coherently to an audience.

Automated Answer Paper Correction

Project Objective:

Aimed to automate the evaluation of written answer papers, a bottleneck in the education system, to make assessment faster, more transparent, and unbiased.

Key Contributions:

Developed a novel scoring algorithm to compare diverse student answers to a fixed model answer. Engineered an automated platform using OCR, image processing, and BERT language models for digitizing and assessing written answers, backed by a robust cloud infrastructure for high throughput and low latency. Created an intuitive UI that generated comprehensive feedback reports for students, fostering a conducive learning environment. The project culminated in a research paper presented at the IEEE Conference on Innovation in IoT, Robotics, and Automation 2022.

Skills Acquired:

Enhanced skills in algorithm development, OCR, image processing, and BERT language models, alongside gaining experience in cloud computing and high-throughput, low-latency systems. The opportunity to present at an IEEE conference also improved my ability to communicate complex technical ideas effectively.

Dynamic Fleet Optimization and Real-time Monitoring System

Project Objective:

The goal was to build a Dynamic Fleet Optimization and Real-time Monitoring System for a global logistics firm that allocates tankers to the most suitable ports based on real-time data, improving operational efficiency across 47,000 containers and more than 160 ships.

Key Contributions:

I developed a core optimization algorithm on Microsoft Azure that dynamically directs tankers based on real-time data, demand forecasts, and port competition analysis. I also created a real-time monitoring dashboard in Power BI that shows fleet positions and port competition levels. In addition, I implemented Azure Functions for continuous data retrieval and designed machine learning models for demand forecasting and competition analysis, retrained weekly to align with the business's decision-making cycle.

Skills Acquired:

Through this project, I mastered cloud computing and serverless architectures on Microsoft Azure and Azure Functions, with a focus on real-time data processing and analysis. I also advanced my data visualization skills by building interactive dashboards in Power BI, and gained substantial experience in predictive analytics by implementing and maintaining machine learning models for demand forecasting and competition analysis.

Algo360

Project Objective:

I contributed to developing a credit scoring model for Algo360 aimed at improving the accuracy of lending decisions. The work involved processing over 50 million customer IDs and identifying more than 230 million banking-domain incidents, with the broader goal of fostering financial inclusion in the Indian financial ecosystem.

Key Contributions:

I halved the solution's overall turnaround time on AWS Lambda by identifying bottlenecks and refining the ensemble models used for credit scoring. Feature engineering and the identification of more informative data entities further improved the model's predictive accuracy. I also expanded the domains used for customer profiling to include e-wallet and online shopping segments, giving a more comprehensive view of each individual's financial behavior and enabling more accurate credit assessments.

Skills Acquired:

This project sharpened my expertise in big data processing, machine learning optimization using ensemble models, and feature engineering. Engaging with AWS Lambda also enhanced my skills in cloud computing, laying a solid foundation for addressing similar large-scale data processing and analytics challenges in future endeavors.

Integrated Sales Forecasting and Analytics Dashboard

Project Objective:

The main aim was to design distinct sales forecasting models for a private equity firm that acquires and optimizes third-party Amazon businesses. By integrating sales and marketing data, the project sought to provide accurate sales forecasts for multiple brands, thereby aiding in the data-driven global expansion of quality consumer goods brands.

Key Contributions:

I developed a tailored sales forecasting model for each brand, combining sales data with marketing metrics. Because sales data is a time series, I used ARIMA (AutoRegressive Integrated Moving Average) for baseline forecasting, and regression analysis to estimate the impact of marketing campaigns and external factors, such as the COVID-19 pandemic, on sales. A comprehensive dashboard visualized the predicted baseline, sales trends, and key performance indicators (KPIs) from marketing efforts. By combining data from various sources, it gave a cohesive view of how marketing activities and other factors influenced sales, supporting informed decision-making.

Skills Acquired:

Through this project, I honed my expertise in time-series analysis and regression modeling, building a solid foundation for tackling complex data-synthesis problems. Designing and developing a comprehensive dashboard strengthened my data visualization skills and deepened my understanding of how to present data in a meaningful, insightful way. Incorporating external factors, such as the impact of COVID-19 and marketing campaigns, into the sales forecasting models gave me valuable experience in improving model robustness and adapting to real-world data complexities.